Also published on Medium.

Yes, the days when you could get away with those excuses are long gone. Whether or not you work in the software development industry, if you have even the slightest connection to development trends, you should have heard of Docker by now. If not, check out why it’s so darn popular! And if you’re still asking yourself why you, a software engineer/developer, should spare even a little time to learn a DevOps tool, I'd say being a stubborn developer is not a good thing. It never was.

Well, in short, Docker is a software container platform. You can create containerized applications, automate the deployment and have fun! Packing, shipping, and running: made simpler, easier and definitely faster! With Docker, an application runs the same way wherever a Docker engine is available. Docker lets you package your entire application with all of its dependencies, which takes most of the headaches out of compatibility issues and machine-specific setup.

To understand Docker, let’s have a quick look at some basic jargon in a minute or less.

Virtual Machines (VM)

In the simplest terms, a VM is an emulation of a real computer that can execute programs. Virtual machines run on top of a hypervisor, also known as a virtual machine monitor (VMM).

A virtual machine is a program on a computer that works as if it were a separate computer inside the main computer. The program that controls virtual machines is the hypervisor, and the computer that runs the virtual machine is called the host.

Docker Containers

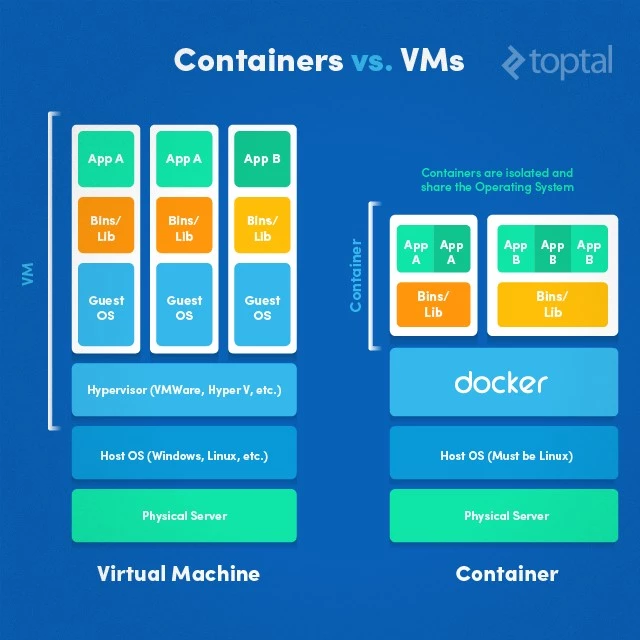

The concept of Docker containers, similar to that of VM, also aims to achieve isolation in terms of applications and programs. Containers, as the term suggests, “contains” every little thing that an application may need to be executed. Think of it as a transportable box, filled with tools and libraries and settings and all that’s required for the execution. So you may ask how the concept of Docker is any different than that of VM and in that case, take a look at this one:

Evidently enough, containers don’t require a full instance of an OS, which allows multiple containers to run smoothly on a single host machine. Unlike VMs, containers share the host system’s kernel with one another, so Docker actually offers OS-level virtualization. That means the processes inside a container are as “native” as the processes running directly on the host.
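One way to see the shared kernel for yourself is to compare the kernel release reported on the host with the one reported inside a container. The following is a minimal sketch, assuming Docker is installed and the public `alpine` image can be pulled; the function name `compare_kernels` is made up for illustration.

```shell
# Hypothetical helper: print the kernel release seen by the host
# and the one seen by a process inside a throwaway container.
compare_kernels() {
  echo "host:      $(uname -r)"
  # --rm removes the container as soon as the command exits
  echo "container: $(docker run --rm alpine uname -r)"
}
```

On a Linux host, calling `compare_kernels` should print the same release twice, because the container has no kernel of its own. On Docker Desktop for macOS or Windows, the container reports the kernel of the lightweight Linux VM that Docker runs behind the scenes.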

How to generate one?

Now that we know what a Docker container really is, let’s talk about how one is generated.

In one line: a Dockerfile builds a Docker image, from which you can run a Docker container.

A Dockerfile is a simple text file with lines of instructions on how to build a Docker image. So it’s basically a blueprint for images, that’s all. And images are blueprints for containers, much as a class is a blueprint for objects in object-oriented programming.
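The chain from Dockerfile to image to container can be sketched with two CLI commands. This is only an illustration, assuming a Dockerfile exists in the current directory; the image tag `my-node-app`, the container names `app-1` and `app-2`, and the function names are placeholders rather than names from this series.

```shell
# Hypothetical tag for illustration
IMAGE_TAG="my-node-app"

# Dockerfile -> image: build from the Dockerfile in the current directory
build_image() {
  docker build -t "$IMAGE_TAG" .
}

# image -> containers: start two independent containers from the same image,
# much like creating two objects from one class
run_two_containers() {
  docker run -d --name app-1 "$IMAGE_TAG"
  docker run -d --name app-2 "$IMAGE_TAG"
}
```

After calling `build_image` and `run_two_containers`, `docker images` should list the single image, and `docker ps` should list the containers that are still running.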

Not Interested in Generating One By Yourself?

Well, then there’s Docker Hub for you, the GitHub for Docker images! Use this online registry to search for a pre-built image, pull it down and use it right away!
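Searching and pulling can also be done from the command line. The sketch below assumes Docker is installed and can reach Docker Hub; it uses the official `node:8` image only as an example, and the function names are made up for illustration.

```shell
# Search Docker Hub for images matching a term, e.g. find_images node
find_images() {
  docker search --limit 5 "$1"
}

# Download the node:8 image, then check that it works by printing
# the Node.js version from inside a throwaway container
try_node_image() {
  docker pull node:8
  docker run --rm node:8 node --version
}
```

Calling `find_images node` lists up to five matching images, and `try_node_image` pulls one of them and runs a single command in it.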

Let’s Write a Simple Dockerfile

First, install Docker by following the simple instructions from here. Then, let’s have a super quick look at some of the most frequently used Dockerfile instructions and what they actually mean. Pay close attention to the comments above each instruction in the following:

```dockerfile
# FROM defines the base image, i.e. the image we want to build ours on top of
# Create a layer from the node:8 Docker image
FROM node:8

# A LABEL is a key-value pair, used to store information
# Set one/multiple labels on one/multiple lines
LABEL com.example.version="0.1.1-beta" com.example.release-date="2018-12-25" com.example.version.is-production=""
LABEL vendor1="Medium Incorporated"

# Create the app directory for storing the app, running the package manager and launching the app
WORKDIR /usr/src/app

# Copy the app's dependency manifests
# (both package.json AND package-lock.json)
# to the root of the Docker image directory created above
COPY package*.json ./

# Install app dependencies
RUN npm install

# For production, the command should be
# RUN npm install --only=production

# Bundle app source inside Docker image
COPY . .

# EXPOSE informs Docker that the container listens on the specified network ports at runtime
# It only documents the port; publish it with -p at run time to access this isolated container
EXPOSE 8080

# Lastly, define the command to run the app
CMD [ "npm", "start" ]
```

Note: You can also copy-paste this GitHub Gist

This is a sample Dockerfile with some of the basic instructions for a Node app, which we’ll build and run to dockerize a Node.js app in later parts of this series. Check out all the available instructions here and follow this link to learn best practices for writing Dockerfiles.
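Two details of the sample are easy to check once an image exists. `EXPOSE` only documents the port, so to reach the app from the host the port still has to be published with `-p` when the container starts. The labels, in turn, are stored as image metadata. The sketch below assumes the image was already built with the hypothetical tag `my-node-app` and that the app listens on port 8080, as in the sample; the function names are placeholders.

```shell
# Hypothetical tag; assumes `docker build -t my-node-app .` was run first
IMAGE_TAG="my-node-app"

# Publish container port 8080 on host port 8080 so the app is reachable
# at http://localhost:8080 (EXPOSE alone does not publish it)
run_published() {
  docker run --rm -p 8080:8080 "$IMAGE_TAG"
}

# Print the LABEL key-value pairs stored in the image, as JSON
show_labels() {
  docker image inspect --format '{{ json .Config.Labels }}' "$IMAGE_TAG"
}
```

`run_published` keeps running in the foreground until the app exits, while `show_labels` returns immediately with the labels set by the `LABEL` instructions.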

What’s Next?

Go through the official docs and get started with developing your applications on Docker. But after spending a significant amount of time with Docker containers, you and your team are likely to run into scalability and performance issues, for instance when you have to create a dozen new microservices to support another dozen new requirements from clients. For that, you may need to deploy those containers across a cluster of physical or virtual machines, which will eventually lead you to “container orchestration”: automating the deployment, scaling, and availability monitoring of container-based applications!

There are several orchestration systems for Docker containers, and Kubernetes is arguably one of the best in the game. Docker also has its own native orchestration tool, Docker Swarm.
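To give a feel for what orchestration looks like in practice, here is a rough Docker Swarm sketch. It assumes a single-node swarm on your own machine and a locally available image; the service name `web`, the tag `my-node-app`, and the function names are placeholders, and a multi-node cluster would additionally need the image to be pullable from a registry.

```shell
# Hypothetical service and image names
SERVICE="web"
IMAGE_TAG="my-node-app"

# Turn the current Docker engine into a single-node swarm, then run
# three replicas of the image behind published port 8080
start_service() {
  docker swarm init
  docker service create --name "$SERVICE" --replicas 3 -p 8080:8080 "$IMAGE_TAG"
}

# Change the number of replicas, e.g. scale_service 5
scale_service() {
  docker service scale "$SERVICE=$1"
}
```

After `start_service`, calling `scale_service 5` asks the swarm to keep five replicas running instead of three.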

Kubernetes or Docker Swarm?

Do watch this simple explainer video by IBM for a clear understanding of why and when to use Kubernetes, which lets you take your existing Docker workloads and run them at scale. If you’re already working with Docker, Kubernetes is a logical next step for managing your workloads.

Happy Dockerizing! ❤